Introduction

I’ve been experimenting with the Model Context Protocol (MCP) for a while now, and it’s changed how I think about extending AI assistants like GitHub Copilot.

Microsoft recently released a stable version of the new MCP trigger for Azure Functions, which makes building a cloud-native, serverless MCP server straightforward. As soon as I saw it, I had an idea: what if I could use it to build a simple, cloud-based tool to save and retrieve notes directly from Copilot?

So I built one using Azure Functions. This post walks through how I created a production-ready MCP server in the cloud, complete with authentication, monitoring, and infrastructure as code. The example is a note management system, but the patterns apply to any MCP server you might want to build.

Why Azure Functions for MCP?

Azure Functions provides several advantages for hosting MCP servers:

- Serverless architecture: Pay only for what you use, with automatic scaling

- Zero infrastructure management: Focus on code, not servers

- Managed Identity support: Secure, passwordless authentication to Azure services

- Built-in monitoring: Application Insights integration out of the box

The Model Context Protocol supports an HTTP transport, which makes Azure Functions a natural fit: clients like VS Code connect to your server through a simple URL, whether it’s running locally or in the cloud.
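To make the transport a little more concrete: MCP messages are JSON-RPC 2.0, and invoking a tool is a tools/call request that carries the tool name and its arguments. The sketch below only builds such a request body. BuildToolCall is an illustrative helper name of mine, not part of any SDK, and a real client such as VS Code also performs an initialize handshake before calling tools.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Illustrative helper (hypothetical name): builds the JSON-RPC 2.0 body
// for an MCP "tools/call" request.
static string BuildToolCall(int id, string toolName, Dictionary<string, string> arguments)
{
    var request = new Dictionary<string, object>
    {
        ["jsonrpc"] = "2.0",
        ["id"] = id,
        ["method"] = "tools/call",
        ["params"] = new Dictionary<string, object>
        {
            ["name"] = toolName,
            ["arguments"] = arguments
        }
    };
    return JsonSerializer.Serialize(request, new JsonSerializerOptions { WriteIndented = true });
}

// Example: the body a client could send to call the get_note tool.
var body = BuildToolCall(1, "get_note", new Dictionary<string, string>
{
    ["title"] = "authentication-pattern"
});
Console.WriteLine(body);
```

The client posts this body to the server URL, and the server answers with a JSON-RPC result for the same id.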

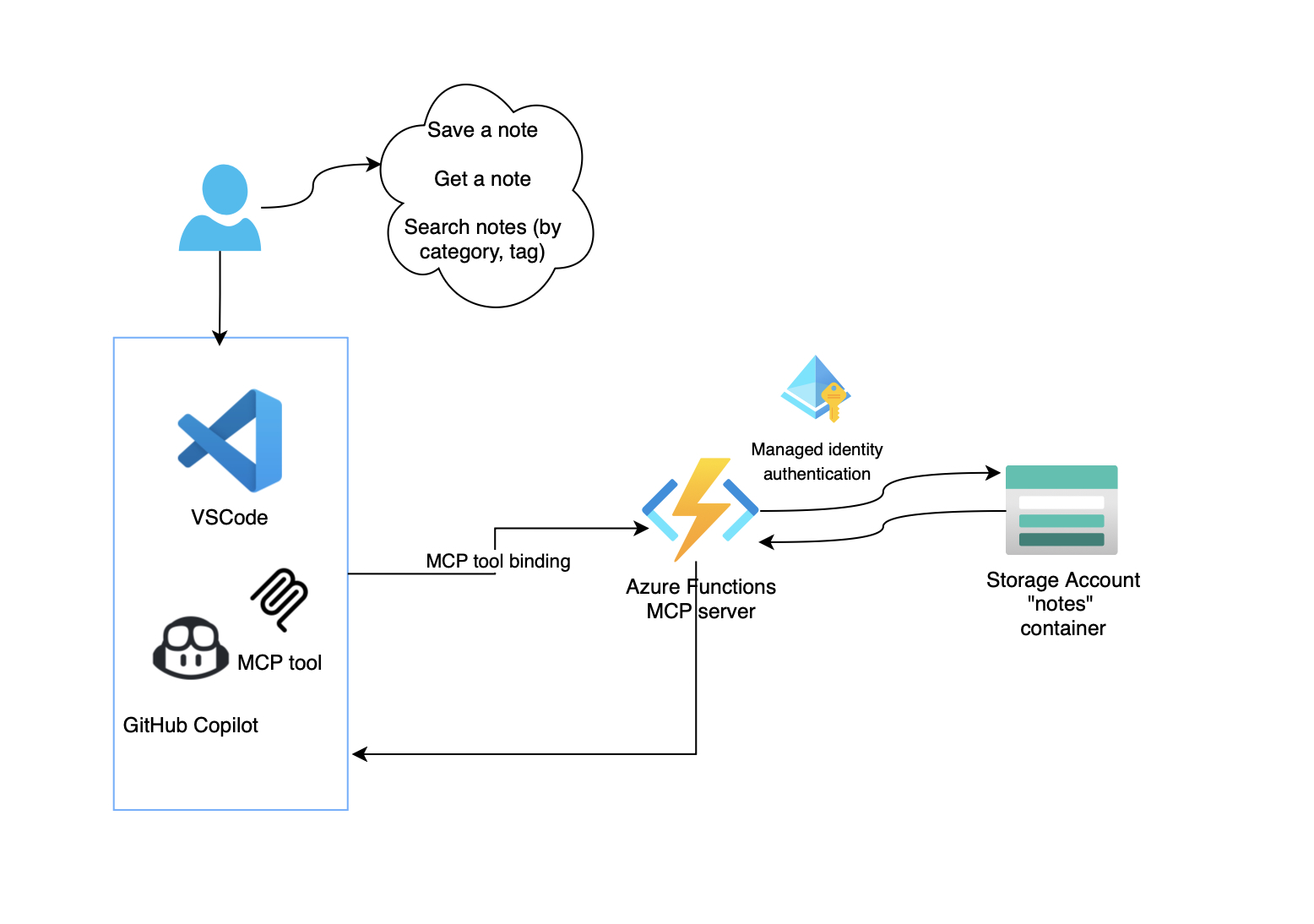

The architecture

The solution I built is a note management system that extends GitHub Copilot with custom tools. Its key components are:

- Azure Function App (Flex Consumption): Hosts the MCP endpoints

- Azure Blob Storage: Stores notes as JSON files

- User-Assigned Managed Identity: Provides secure, passwordless access

- Application Insights: Monitors performance and errors

- Bicep templates: Infrastructure as Code for reproducible deployments

Implementing MCP Tools in C#

Saving a Note

    [Function(nameof(SaveNote))]
    [BlobOutput("notes/{mcptoolargs.title}.json")]
    public string SaveNote(
        [McpToolTrigger("save_note", "Saves a note with a title, category, tags, and content")]
        ToolInvocationContext context,
        [McpToolProperty("title", "The title or identifier for the note", true)]
        string title,
        [McpToolProperty("category", "The category of the note")]
        string category,
        [McpToolProperty("tags", "Comma-separated tags for organizing the note")]
        string tags,
        [McpToolProperty("content", "The main content of the note", true)]
        string content)
    {
        var note = new
        {
            Title = title,
            Category = category ?? "general",
            Tags = tags?.Split(',').Select(t => t.Trim()).ToList() ?? [],
            Content = content,
            CreatedAt = DateTime.UtcNow,
            UpdatedAt = DateTime.UtcNow
        };

        return JsonSerializer.Serialize(note, new JsonSerializerOptions { WriteIndented = true });
    }

Notice the use of attributes:

- [McpToolTrigger]: Declares this as an MCP tool with a name and description

- [McpToolProperty]: Defines each parameter that Copilot can pass to the tool

- [BlobOutput]: Automatically saves the return value to Blob Storage
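One detail worth a closer look is the tags parameter, which arrives as a single comma-separated string. The Split and Trim calls in SaveNote keep empty entries when the input has a trailing or doubled comma. A possible variation, which is my own sketch and not code from the project, uses the split options built into .NET 5 and later. ParseTags is a hypothetical helper name.

```csharp
#nullable enable
using System;

// Hypothetical helper (not part of the original project): turns the
// comma-separated "tags" argument into a clean array, dropping blanks.
static string[] ParseTags(string? tags) =>
    tags?.Split(',', StringSplitOptions.RemoveEmptyEntries | StringSplitOptions.TrimEntries)
        ?? Array.Empty<string>();

Console.WriteLine(string.Join("|", ParseTags("security, oauth,, ")));  // prints "security|oauth"
Console.WriteLine(ParseTags(null).Length);                              // prints "0"
```

Whether empty tags matter depends on how you query notes later, so treat this as an option rather than a required change.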

Retrieving a Note

    [Function(nameof(GetNote))]
    public string GetNote(
        [McpToolTrigger("get_note", "Retrieves a note by its title")]
        ToolInvocationContext context,
        [McpToolProperty("title", "The title of the note to retrieve", true)]
        string title,
        [BlobInput("notes/{mcptoolargs.title}.json")]
        string noteContent)
    {
        if (string.IsNullOrEmpty(noteContent))
        {
            return JsonSerializer.Serialize(new { error = "Note not found", title });
        }

        return JsonSerializer.Serialize(new
        {
            title,
            found = true,
            content = noteContent
        });
    }

The [BlobInput] binding automatically retrieves the note from storage using the title parameter. The {mcptoolargs.title} placeholder dynamically constructs the blob path.
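As a mental model for that placeholder, you can think of the binding expression as a template in which each {mcptoolargs.name} token is replaced by the matching tool argument. The sketch below imitates that substitution in plain C#. It is not how the Functions runtime is actually implemented, and ResolveBlobPath is a hypothetical name used only for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Mental model only: replaces each {mcptoolargs.<name>} token in the
// template with the matching tool argument, and fails if one is missing.
static string ResolveBlobPath(string template, IReadOnlyDictionary<string, string> toolArgs) =>
    Regex.Replace(template, @"\{mcptoolargs\.(\w+)\}", match =>
    {
        var name = match.Groups[1].Value;
        if (!toolArgs.TryGetValue(name, out var value))
        {
            throw new KeyNotFoundException($"No tool argument named '{name}'.");
        }
        return value;
    });

var toolArguments = new Dictionary<string, string> { ["title"] = "authentication-pattern" };
Console.WriteLine(ResolveBlobPath("notes/{mcptoolargs.title}.json", toolArguments));
// Prints: notes/authentication-pattern.json
```

Because the title flows straight into the blob name, it is worth keeping titles simple (for example, lowercase words joined by hyphens).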

Infrastructure as Code with Bicep

User-Assigned Managed Identity

    module apiUserAssignedIdentity 'br/public:avm/res/managed-identity/user-assigned-identity:0.4.2' = {
      name: 'apiUserAssignedIdentity'
      scope: rg
      params: {
        location: location
        tags: tags
        name: '${abbrs.managedIdentityUserAssignedIdentities}api-${resourceToken}'
      }
    }

Storage Account with RBAC

    module storage 'br/public:avm/res/storage/storage-account:0.27.1' = {
      name: 'storage'
      scope: rg
      params: {
        name: storageAccountActualName
        location: location
        skuName: 'Standard_LRS'
        blobServices: {
          containers: [
            { name: deploymentStorageContainerName }
            { name: 'notes' }
          ]
        }
        allowSharedKeyAccess: false // Enforce managed identity only
        minimumTlsVersion: 'TLS1_2'
      }
    }

    // Grant the Function App's identity access to blob storage
    module blobRoleAssignment 'app/rbac/storage-access.bicep' = {
      name: 'blobRoleAssignmentapi'
      scope: rg
      params: {
        storageAccountName: storage.outputs.name
        roleDefinitionID: 'b7e6dc6d-f1e8-4753-8033-0f276bb0955b' // Storage Blob Data Owner
        principalID: apiUserAssignedIdentity.outputs.principalId
      }
    }

Notice how I disabled shared key access (allowSharedKeyAccess: false). This enforces the use of managed identities, eliminating the need to manage connection strings or access keys, an important security improvement.

Using with GitHub Copilot

To connect VS Code to the MCP server, create a .vscode/mcp.json file in your workspace:

For local development:

    {
      "servers": {
        "notes-mcp": {
          "type": "http",
          "url": "http://localhost:7071/runtime/webhooks/mcp"
        }
      }
    }

For production:

    {
      "inputs": [
        {
          "type": "promptString",
          "id": "function-key",
          "description": "Azure Function App Key",
          "password": true
        }
      ],
      "servers": {
        "notes-mcp": {
          "type": "http",
          "url": "https://your-app.azurewebsites.net/runtime/webhooks/mcp",
          "headers": {
            "x-functions-key": "${input:function-key}"
          }
        }
      }
    }
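If you want to check the production endpoint outside VS Code, the same URL and header can be used from any HTTP client. Here is a minimal sketch that only builds the request, without sending it. BuildMcpRequest is a hypothetical helper name, the host and key are placeholders, and the Accept values follow my reading of MCP's streamable HTTP transport. A full client would also send an initialize request first.

```csharp
using System;
using System.Net.Http;
using System.Text;

// Hypothetical helper: wraps a JSON-RPC body in an HTTP POST aimed at the
// MCP endpoint, authenticated with the x-functions-key header.
static HttpRequestMessage BuildMcpRequest(string appBaseUrl, string functionKey, string jsonRpcBody)
{
    var endpoint = new Uri(new Uri(appBaseUrl), "/runtime/webhooks/mcp");
    var message = new HttpRequestMessage(HttpMethod.Post, endpoint)
    {
        Content = new StringContent(jsonRpcBody, Encoding.UTF8, "application/json")
    };
    message.Headers.Add("x-functions-key", functionKey);
    // Assumption: the server may answer with plain JSON or an event stream.
    message.Headers.Accept.ParseAdd("application/json");
    message.Headers.Accept.ParseAdd("text/event-stream");
    return message;
}

// Placeholder host and key; replace with your own values before sending.
var listTools = "{\"jsonrpc\":\"2.0\",\"id\":1,\"method\":\"tools/list\"}";
var listToolsRequest = BuildMcpRequest("https://your-app.azurewebsites.net", "<function-key>", listTools);
Console.WriteLine($"{listToolsRequest.Method} {listToolsRequest.RequestUri}");
```

To actually send it, pass the message to HttpClient.SendAsync and read the response body.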

Then, in VS Code, you can interact with your notes through GitHub Copilot:

- “Save this code snippet as a note titled ‘authentication-pattern’ with tags ‘security,oauth’”

- “Get my note called authentication-pattern”

- “Search notes with tag ‘security’”

- “List all my notes”
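Behind a prompt like “Get my note called authentication-pattern”, the get_note tool returns a small JSON envelope: either an error field, or a content field holding the stored note as a JSON string. Copilot reads this for you, but if you consume the tool from your own code, a sketch like the following could unwrap it. ReadNoteBody is a hypothetical helper, and the sample note text is made up for the example.

```csharp
#nullable enable
using System;
using System.Text.Json;

// Hypothetical helper: returns the note body from a get_note response,
// or null when the tool reported an error such as "Note not found".
static string? ReadNoteBody(string getNoteResponse)
{
    using var envelope = JsonDocument.Parse(getNoteResponse);
    var root = envelope.RootElement;
    if (root.TryGetProperty("error", out _))
    {
        return null;
    }

    // "content" is itself a JSON string: the blob written by SaveNote.
    var storedJson = root.GetProperty("content").GetString() ?? "{}";
    using var note = JsonDocument.Parse(storedJson);

    // SaveNote serializes with default options, so property names stay PascalCase.
    return note.RootElement.TryGetProperty("Content", out var body) ? body.GetString() : null;
}

// Made-up sample data shaped like the SaveNote and GetNote outputs.
var stored = JsonSerializer.Serialize(new { Title = "authentication-pattern", Content = "Use PKCE for public clients." });
var response = JsonSerializer.Serialize(new { title = "authentication-pattern", found = true, content = stored });
Console.WriteLine(ReadNoteBody(response));
```

Note the two casing styles: the envelope from GetNote uses lowercase names, while the stored note keeps the PascalCase names from SaveNote.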

Deployment with Azure Developer CLI

The project uses Azure Developer CLI (azd) for streamlined deployments:

    # Initialize and authenticate
    azd init
    azd auth login

    # Deploy everything
    azd up

The single azd up command:

- Creates the resource group

- Deploys all infrastructure (storage, function app, monitoring)

- Configures RBAC permissions

- Deploys the application code

- Outputs the Function App URL

To remove all the resources when done:

    azd down --purge

What’s next?

This implementation opens up many possibilities:

- Multi-tenant support: Use Microsoft Entra ID (formerly Azure AD) to segregate notes by user

- Advanced search: Integrate Azure AI Search (formerly Azure Cognitive Search) for full-text search

- Cosmos DB integration: For more complex querying and relationships

- Collaborative features: Share notes across teams with access control

- Additional tools: Add tools for task management, bookmarks, or code snippets

Conclusion

Building an MCP server with Azure Functions demonstrates how serverless technologies can extend AI assistants in powerful ways. The combination of MCP’s extensibility and Azure’s cloud-native services creates a scalable, secure, and cost-effective solution.

Whether you’re building internal tools for your team or experimenting with AI extensibility, this pattern provides a solid foundation. The serverless model means you can start small and scale as needed without worrying about infrastructure.

Check out the complete source code: azure-functions-notes-mcp-server

Have you built your own MCP server? What tools are you extending GitHub Copilot with? Let me know in the comments!

Want to learn more about MCP? Check out the Model Context Protocol documentation and explore the growing ecosystem of MCP servers.