Smooth Sailing with DevOps: Deploying Azure Storage Account using Terraform and Azure YAML Pipelines

Overview

Introduction

Hi 👋 my fellow tech enthusiasts. As a DevOps Engineer, I often find myself navigating the complex waters of IT challenges both at work and during my free time. Today, I want to share a recent journey into the world of DevOps where we'll be deploying an Azure Storage Account using Terraform and Azure YAML Pipelines. So, grab your virtual life vests, and let's embark on this adventure.

Why Terraform and Azure YAML Pipelines?

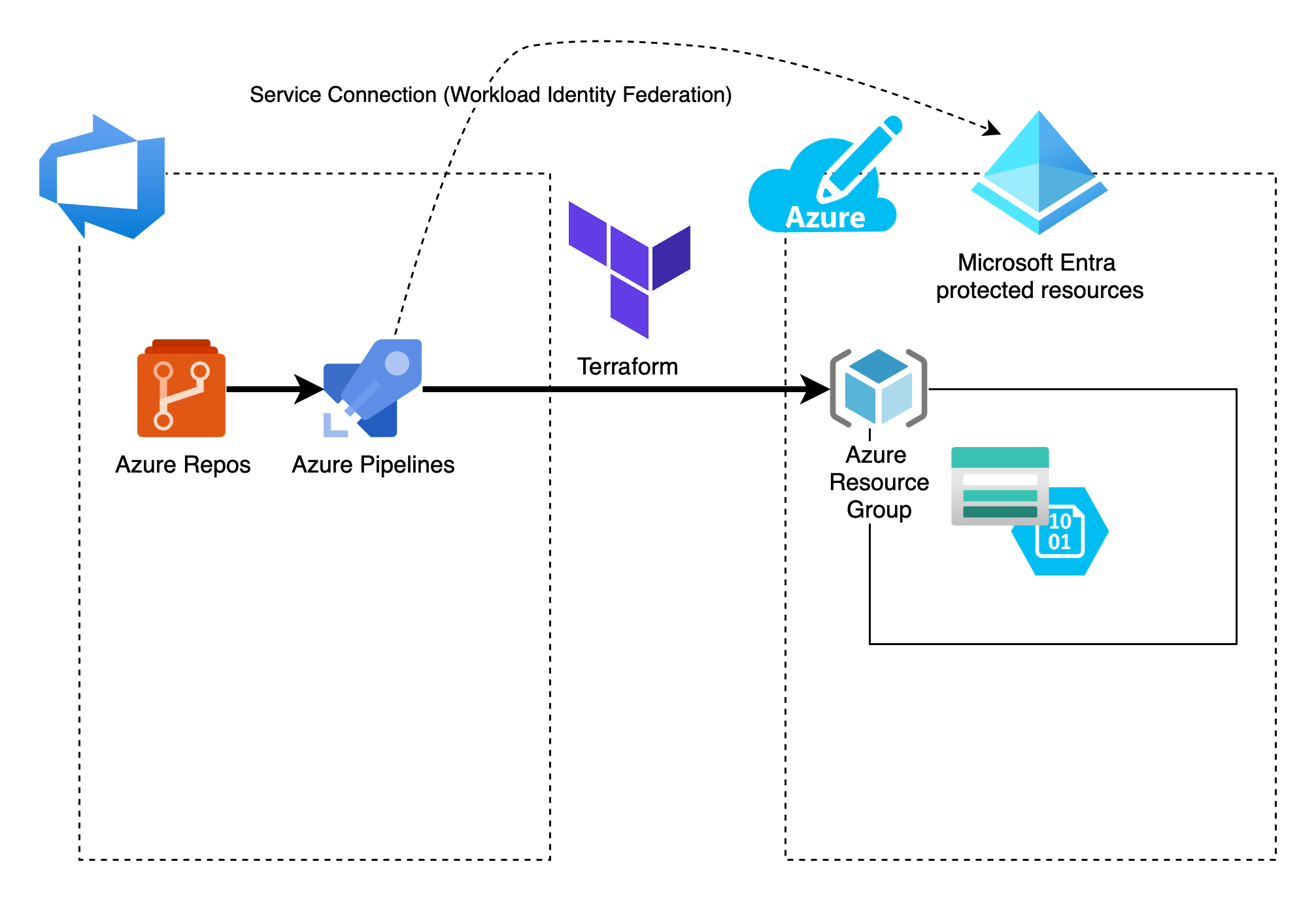

Terraform, an Infrastructure as Code (IaC) tool, is a powerful choice for provisioning and managing cloud resources. Combined with Azure DevOps YAML Pipelines, it lets you automate deployments, keep environments consistent, and collaborate on infrastructure changes within your DevOps team.

The Toolkit

Before we dive into the details, let's ensure our toolkit is well-prepared:

- Azure DevOps: Create an Azure DevOps project if you don't already have one. We'll be using Azure YAML Pipelines for our deployment.

- Workload Identity Federation: Create a Service Connection with Workload Identity Federation to authenticate with your Azure subscription. I recently wrote an article about setting up this type of Service Connection.

- Azure Storage Account: You'll need a place to store your Terraform state files. We'll create this as part of the process.

- Azure Repos: We'll store our Terraform configurations in an Azure Repos Git repository within your Azure DevOps project.

You can find the necessary files in my [GitHub project](https://github.com/arashjalalat/deploy-storage-terraform-azure-pipelines?ref=ajtech.nl).

Step 1: Terraform Configuration

Create a directory for your Terraform configuration (the pipeline in the next step expects it to be named terraform) and place a .tf file in it with the configuration for the Azure Storage Account. Don't confuse this storage account with the remote storage account on Azure that holds the Terraform state; that one is part of the next step.

This Terraform configuration deploys an Azure resource group, a storage account, and a private container inside the storage account. The name of the storage account must be unique within Azure, which is why it is generated: the prefix hcl followed by 16 random hexadecimal characters (the hex encoding of 8 random bytes). The backend configuration is left empty because its values are passed on the command line in the next step. Here's a simple example:

    terraform {
      # Partial backend configuration: the values are supplied with -backend-config during terraform init
      backend "azurerm" {}
    }

    provider "azurerm" {
      features {}
    }

    # 8 random bytes, exposed as 16 hexadecimal characters through .hex
    resource "random_id" "storage_account" {
      byte_length = 8
    }

    resource "azurerm_resource_group" "hcl-rg" {
      name     = "hcl-resources"
      location = "West Europe"
    }

    resource "azurerm_storage_account" "hcl-sa" {
      name                     = "hcl${lower(random_id.storage_account.hex)}"
      resource_group_name      = azurerm_resource_group.hcl-rg.name
      location                 = azurerm_resource_group.hcl-rg.location
      account_tier             = "Standard"
      account_replication_type = "LRS"
    }

    resource "azurerm_storage_container" "hcl-ct" {
      name                  = "hcl-container"
      storage_account_name  = azurerm_storage_account.hcl-sa.name
      container_access_type = "private"
    }

    # nonsensitive() prints the connection string, including the account key, in plain text
    output "connection_string" {
      value = nonsensitive(azurerm_storage_account.hcl-sa.primary_connection_string)
    }
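
The configuration above relies on Terraform resolving the azurerm and random providers implicitly. As a minimal sketch, you could pin both in a separate terraform block, for example in a versions.tf file next to the configuration. The file name and the version constraints below are assumptions for illustration and not part of the original project, so use the versions you have actually tested.

```hcl
# versions.tf (hypothetical file name)
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0" # assumed constraint, adjust to the version you test with
    }
    random = {
      source  = "hashicorp/random"
      version = "~> 3.0" # assumed constraint, adjust to the version you test with
    }
  }
}
```

Pinning the providers keeps a later provider release from changing the behaviour of the pipeline between runs.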

Step 2: Azure YAML Pipeline Configuration

In your Azure DevOps project, create an Azure YAML Pipeline to deploy your Terraform configuration, and change the values as desired. The pipeline first creates an Azure Storage Account for the Terraform state with the Azure CLI. State is how Terraform knows which Azure resources to add, update, or destroy. A blob container is created inside the storage account to store the state files. Once the storage account exists, its account key is stored in a pipeline variable that is consumed by the second Azure CLI task.

In the second task, terraform init initialises the working directory and configures the remote backend, terraform plan creates an execution plan that lets you preview your infrastructure changes before they are deployed to Azure, and terraform apply executes that plan to deploy the infrastructure. Finally, the task includes a terraform destroy step for cleanup.

Here's a pipeline configuration:

    trigger: none

    variables:
      - name: RESOURCE_GROUP_NAME
        value: tfstaterg
      - name: STORAGE_ACCOUNT_NAME
        value: tfstatesatraining
      - name: CONTAINER_NAME
        value: tfstateblobdemo

    pool:
      vmImage: ubuntu-latest

    steps:
    - task: AzureCLI@2
      displayName: TF remote state storage account
      inputs:
        azureSubscription: 'test-automatic'
        scriptType: 'pscore'
        scriptLocation: 'inlineScript'
        inlineScript: |
          # Create resource group
          az group create --name $(RESOURCE_GROUP_NAME) --location westeurope

          # Create storage account
          az storage account create `
            --resource-group $(RESOURCE_GROUP_NAME) `
            --name $(STORAGE_ACCOUNT_NAME) `
            --sku Standard_LRS `
            --allow-blob-public-access false

          # Create blob container
          az storage container create --name $(CONTAINER_NAME) --account-name $(STORAGE_ACCOUNT_NAME)

          # Read the first account key and expose it to later tasks as ARM_ACCESS_KEY
          $ACCOUNT_KEY=$(az storage account keys list `
            --resource-group $(RESOURCE_GROUP_NAME) `
            --account-name $(STORAGE_ACCOUNT_NAME) `
            --query '[0].value' -o tsv)

          Write-Host "##vso[task.setvariable variable=ARM_ACCESS_KEY;]$ACCOUNT_KEY"

    - task: AzureCLI@2
      displayName: Terraform deploy az resources
      inputs:
        azureSubscription: 'test-automatic'
        scriptType: 'pscore'
        scriptLocation: 'inlineScript'
        inlineScript: |
          # "key" is the name of the state blob (terraform.tfstate is an assumed name);
          # the storage account key belongs in "access_key"
          terraform init `
            -backend-config="resource_group_name=$(RESOURCE_GROUP_NAME)" `
            -backend-config="storage_account_name=$(STORAGE_ACCOUNT_NAME)" `
            -backend-config="container_name=$(CONTAINER_NAME)" `
            -backend-config="key=terraform.tfstate" `
            -backend-config="access_key=$(ARM_ACCESS_KEY)"

          terraform plan -input=false -out=tfplan

          terraform apply -auto-approve tfplan

          # Cleanup: remove this line to keep the deployed resources
          terraform destroy -auto-approve
        workingDirectory: $(System.DefaultWorkingDirectory)/terraform
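
To make the partial backend configuration more concrete: the -backend-config values passed to terraform init fill in the empty backend "azurerm" {} block at init time. As a rough sketch, the result corresponds to a fully written-out backend block like the one below, using the variable values from this pipeline. The state blob name terraform.tfstate is an assumption for illustration. The access key is deliberately left out, because the azurerm backend can also read it from the ARM_ACCESS_KEY environment variable.

```hcl
terraform {
  backend "azurerm" {
    resource_group_name  = "tfstaterg"         # RESOURCE_GROUP_NAME in the pipeline
    storage_account_name = "tfstatesatraining" # STORAGE_ACCOUNT_NAME in the pipeline
    container_name       = "tfstateblobdemo"   # CONTAINER_NAME in the pipeline
    key                  = "terraform.tfstate" # name of the state blob (assumed)
  }
}
```

Keeping these values out of the .tf file and passing them at init time means the same configuration can point at a different state storage account without being edited.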

Step 3: Execution

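Commit your Terraform configuration to your Azure Repos repository and run the Azure YAML Pipeline manually, since trigger: none disables automatic runs. The pipeline provisions your Azure Storage Account based on your Terraform configuration and, because of the final destroy step, removes it again at the end of the run.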

Conclusion

Deploying Azure resources using Terraform and Azure YAML Pipelines is a journey worth embarking on. It empowers DevOps engineers to automate infrastructure provisioning, ensure consistency, and collaborate seamlessly. In this blog, we've just scratched the surface, but the possibilities are endless.

So, hoist your sails and explore the boundless sea of DevOps automation. Happy coding, and may your deployments be as smooth as a calm ocean on a sunny day!

That's all for this month's IT challenge. Stay tuned for more exciting tech adventures in the world of DevOps!